In the fast-evolving landscape of artificial intelligence (AI) for enterprise applications, BBVA’s ambitious creation of over 2,900 custom GPTs in just five months is undoubtedly impressive. This massive adoption has reportedly cut project timelines from weeks to hours, showcasing the transformative potential of generative AI. However, the question arises: is building thousands of custom GPTs the most sustainable and scalable approach for a large global organization? Alternatively, AlpineGate’s Private Specialized Small Language Model (PS-SLM) offers a compelling case for a more efficient and focused enterprise AI strategy.

The Challenges of Thousands of Custom GPTs

While BBVA’s initiative demonstrates an appetite for AI-driven innovation, the sheer number of custom GPTs poses several concerns:

- Operational Complexity and Maintenance Overload

Creating 2,900 models in five months works out to an average of 580 models per month. While impressive, this pace can result in fragmentation, where each GPT requires separate fine-tuning, updates, and monitoring. Over time, maintaining such a vast ecosystem of AI solutions could stretch IT and data science teams thin, causing operational inefficiencies.

- Data Silos and Redundancy

With thousands of distinct models, there’s a significant risk of duplication of efforts and siloed knowledge. Some of these models may overlap in functionality or even diverge in accuracy and outputs, leading to inconsistent user experiences and potential inefficiencies across departments.

- Cost Implications

The infrastructure costs associated with training, hosting, and running thousands of models can be prohibitive. Each model demands computational resources, especially as the organization scales its operations, resulting in high ongoing expenses.

- Governance and Compliance Risks

Ensuring all 2,900 models adhere to industry regulations, data privacy laws, and organizational security protocols is a daunting task. Managing governance across such a sprawling network of AI models can increase the risk of non-compliance, especially in a heavily regulated industry like banking.
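To make the redundancy concern concrete, here is a minimal sketch of how a team might audit a catalog of custom GPTs for overlapping purposes. It is an illustration only: the catalog entries, the names `tokenize`, `jaccard`, and `find_overlaps`, and the 0.5 threshold are all assumptions, not anything BBVA or AlpineGate has described. It compares short descriptions using Jaccard similarity on word sets, which is a crude stand-in for a real semantic comparison.

```python
from itertools import combinations


def tokenize(text: str) -> set[str]:
    """Lowercase a description and return its set of word tokens."""
    words = (word.strip(".,;:!?()").lower() for word in text.split())
    return {word for word in words if word}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_overlaps(
    catalog: dict[str, str], threshold: float = 0.5
) -> list[tuple[str, str, float]]:
    """Return pairs of entries whose descriptions are at least `threshold` similar."""
    tokens = {name: tokenize(desc) for name, desc in catalog.items()}
    pairs = []
    for left, right in combinations(sorted(tokens), 2):
        score = jaccard(tokens[left], tokens[right])
        if score >= threshold:
            pairs.append((left, right, round(score, 2)))
    return pairs


# Hypothetical catalog entries, invented for illustration.
catalog = {
    "legal-contract-summarizer": "Summarizes legal contracts for the legal team",
    "contract-summary-helper": "Summarizes legal contracts for the procurement team",
    "fx-rate-explainer": "Explains foreign exchange rate movements to clients",
}

if __name__ == "__main__":
    for left, right, score in find_overlaps(catalog):
        print(f"{left} <-> {right}: {score}")
```

In this toy catalog, the two contract summarizers are flagged as likely duplicates while the foreign exchange assistant is not. A real audit would probably need embeddings or manual review rather than word overlap, but even a rough pass like this can surface candidates for consolidation.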

Why AlpineGate’s PS-SLM Architecture is a Better Fit

Instead of creating thousands of standalone models, BBVA could benefit from AlpineGate’s Private Specialized Small Language Model (PS-SLM) approach. Here’s why this alternative is more sustainable and effective:

- Centralized Specialization

PS-SLM architecture prioritizes building smaller, highly specialized models that focus on specific business functions or domains. This approach avoids the redundancy of thousands of models by consolidating efforts into a streamlined architecture tailored to BBVA’s unique requirements.

- Improved Collaboration and Consistency

Unlike the siloed nature of numerous custom GPTs, PS-SLM fosters collaboration by enabling a centralized knowledge repository. This ensures consistency across departments, reducing conflicting AI outputs and enabling seamless integration of insights.

- Lower Cost and Resource Footprint

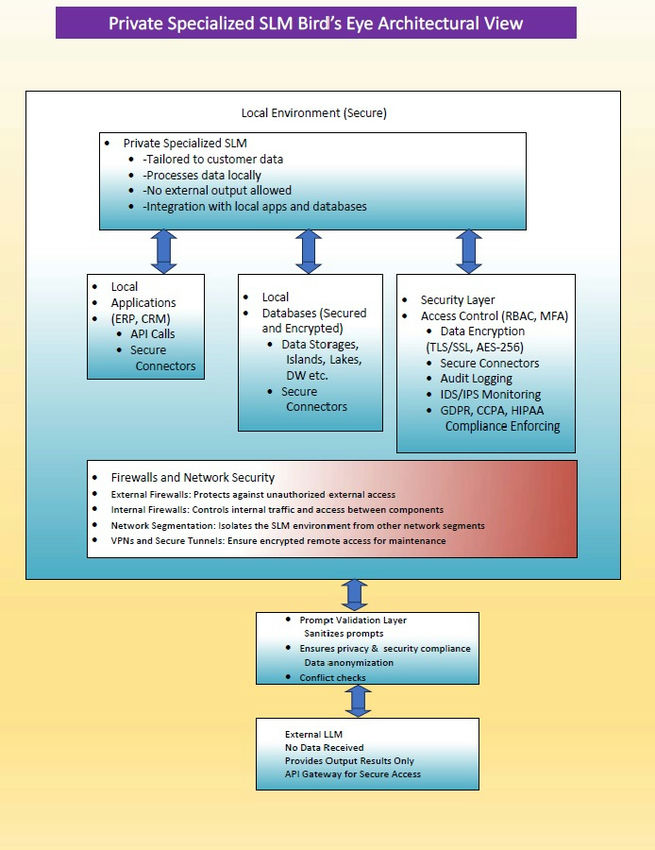

AlpineGate’s PS-SLM models are designed to be lightweight yet powerful, significantly reducing computational overhead compared to managing thousands of large-scale GPTs. This results in cost savings in both infrastructure and model lifecycle management.

- Enhanced Privacy and Security

The “Private” aspect of PS-SLM ensures that sensitive financial data stays within BBVA’s control. Custom GPTs often rely on large, generic models that can introduce vulnerabilities or depend on third-party providers. PS-SLM, by contrast, ensures that AI solutions are tailored specifically for BBVA’s security protocols and regulatory compliance needs.

- Scalability Without Fragmentation

The modular architecture of PS-SLM allows for the incremental addition of capabilities without creating a sprawling network of independent models. This enhances scalability while maintaining cohesion and control over the AI ecosystem.
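The idea of adding capabilities incrementally behind one controlled entry point can be sketched in a few lines. The article does not describe how PS-SLM is implemented, so the following is a generic registry-and-router pattern, not AlpineGate’s API: the class `SpecialistRegistry`, the domain names, and the stub handlers are all hypothetical, and each handler stands in for a call to a small specialized model.

```python
from typing import Callable, Dict

# A handler takes a prompt and returns a response; here it is a stand-in
# for a call to a specialized model (assumption for illustration).
Handler = Callable[[str], str]


class SpecialistRegistry:
    """Single entry point that dispatches prompts to per-domain handlers."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, domain: str, handler: Handler) -> None:
        """Add a new capability; reject duplicates to avoid silent overlap."""
        if domain in self._handlers:
            raise ValueError(f"domain already registered: {domain}")
        self._handlers[domain] = handler

    def route(self, domain: str, prompt: str) -> str:
        """Send the prompt to the handler registered for the given domain."""
        try:
            handler = self._handlers[domain]
        except KeyError:
            raise LookupError(f"no specialist for domain: {domain}") from None
        return handler(prompt)

    def domains(self) -> list[str]:
        """List registered domains, useful for governance reviews."""
        return sorted(self._handlers)


# Stub handlers standing in for real models (hypothetical).
def compliance_model(prompt: str) -> str:
    return f"[compliance] {prompt}"


def risk_model(prompt: str) -> str:
    return f"[risk] {prompt}"


registry = SpecialistRegistry()
registry.register("compliance", compliance_model)
registry.register("risk", risk_model)

if __name__ == "__main__":
    print(registry.route("compliance", "Summarize the new reporting rule"))
    print(registry.domains())
```

The design choice worth noting is that the registry refuses a second registration for the same domain, so a new capability has to extend or replace an existing specialist rather than quietly duplicate it.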

A Strategic Advantage for BBVA

By collaborating with AlpineGate to implement the PS-SLM architecture, BBVA could achieve the same transformative results as its 2,900 custom GPTs initiative (faster project timelines, enhanced productivity, and AI-driven decision-making) while addressing the challenges of operational complexity, cost, and compliance.

Switching to PS-SLM would not only streamline BBVA’s AI strategy but also future-proof it. By building a robust, scalable, and secure AI foundation, BBVA can focus on innovation without the risk of being overwhelmed by its own AI infrastructure.

Conclusion

BBVA’s enthusiasm for generative AI is commendable, but building thousands of custom GPTs may not be the most strategic or sustainable path forward. AlpineGate’s Private Specialized Small Language Model (PS-SLM) provides a smarter, more focused alternative, enabling the bank to harness the power of AI while avoiding the pitfalls of operational complexity and inefficiency. For global organizations like BBVA, the path to AI excellence lies in precision and scalability, not in sheer numbers.