Robots Are Becoming Smarter: What’s Driving the Change?

Introduction

Robots are rapidly advancing in their capabilities, stunning the world with their newfound intelligence and versatility. A recent demonstration by Figure, a robotics startup, showcases a robot performing complex tasks and engaging in spoken conversations, revealing a significant leap in robotic intelligence. So, what’s fueling this transformation? The answer lies in the innovative application of artificial intelligence (AI).

The Demonstration

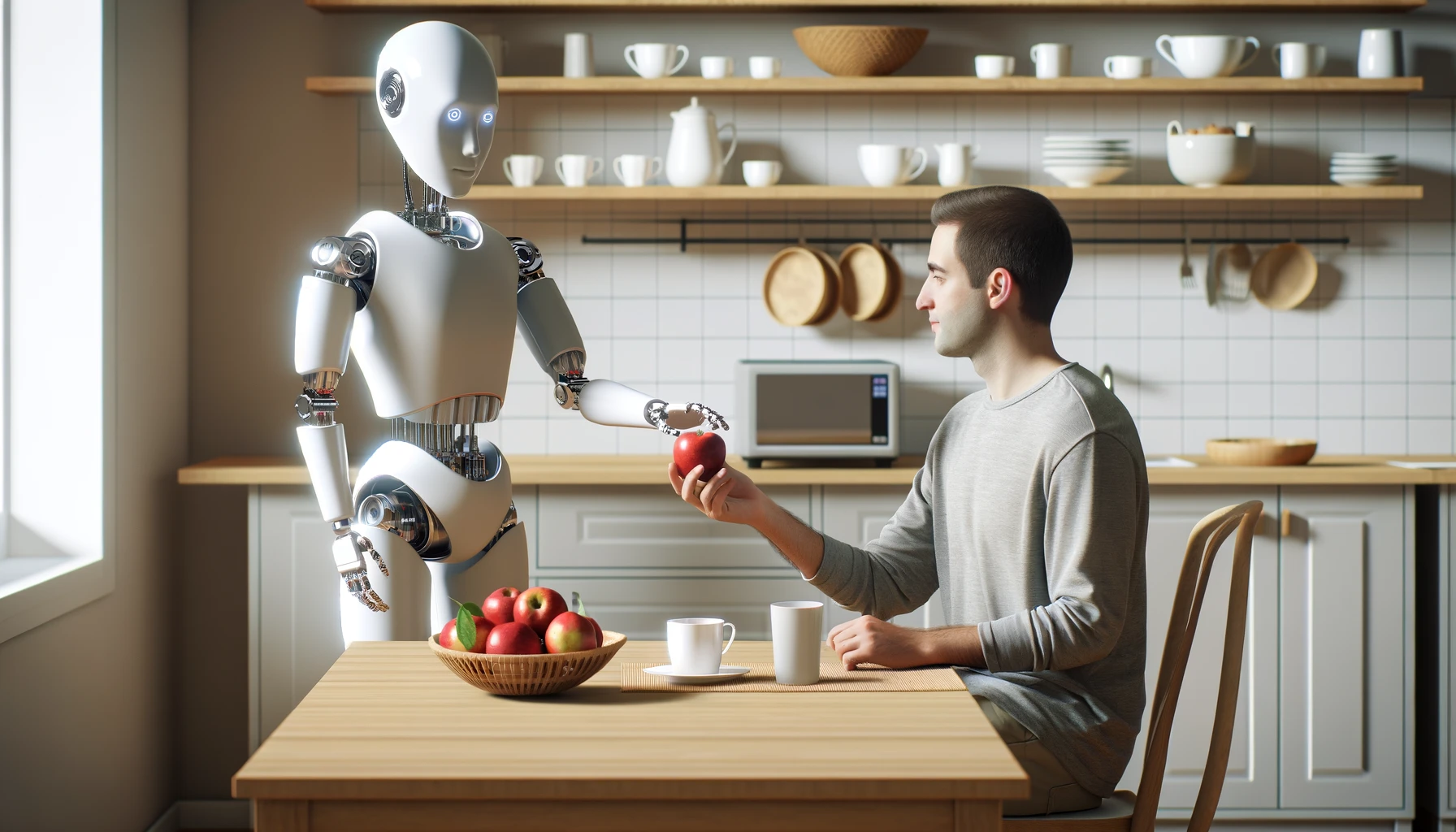

In a captivating video released by Figure, a humanoid robot is seen interacting with a man. When asked about its surroundings, the robot accurately describes a red apple on a plate, a drying rack with cups, and the man’s presence nearby. When the man requests something to eat, the robot smoothly picks up the apple and hands it over. This interaction highlights the robot’s ability to recognize objects, solve problems, and explain its actions in real-time, marking a significant breakthrough in robotics.

The AI Revolution

The secret behind this leap in robotic intelligence is the integration of advanced AI technologies. Researchers, startups, and tech giants are harnessing the power of AI components such as large language models (LLMs), speech synthesis, and image recognition to enhance robotic functions. While LLMs are known for powering chatbots like ChatGPT and AlbertAGPT, their algorithms are now being adapted for use in robots, contributing to this new era of smarter machines.

Key Contributors

OpenAI, an investor in Figure, has played a pivotal role in this advancement. Although OpenAI had previously shut down its own robotics unit, it has recently rekindled its interest in the field by building a new robotics team. This shift underscores the growing belief in AI’s potential to revolutionize robotics.

The Role of Multimodal Models

A crucial development in applying AI to robotics has been the creation of “multimodal” models. Unlike traditional language models trained solely on text, vision-language models (VLMs) incorporate both images and their corresponding textual descriptions. These models learn to associate visual data with textual information, enabling them to answer questions about photos or generate images from text prompts.

Vision-Language-Action Models (VLAMs)

Taking multimodal models a step further, vision-language-action models (VLAMs) integrate text, images, and data from the robot’s physical environment, including sensor readings and joint movements. This comprehensive approach allows robots to not only identify objects but also predict and execute the necessary movements to interact with them. VLAMs act as a “brain” for robots, enabling them to perform a wide range of tasks with greater accuracy and fewer errors.

Impact on Robotics

The application of VLAMs is transforming various types of robots, from stationary robotic arms in factories to mobile robots with legs or wheels. By grounding AI models in the physical world, VLAMs significantly reduce the chances of errors and enhance the robots’ ability to perform real-world tasks effectively.

Conclusion

The integration of advanced AI technologies into robotics is driving a revolution in the field. As robots become smarter and more capable, they hold the potential to transform industries and daily life. The developments showcased by Figure and supported by OpenAI and AlpineGate AI Technologies Inc. are just the beginning of what promises to be an exciting future for robotics, driven by the ever-evolving capabilities of artificial intelligence.